QNX From The Board Up #12 - mmap(), Part 2

In this second post about memory allocation, we take a look at memory management units (MMUs), and virtual and physical addressing.

Welcome to the blog series "From The Board Up" by Michael Brown. In this series we create a QNX image from scratch and build upon it stepwise, all the while looking deep under the hood to understand what the system is doing at each step.

With QNX for a decade now, Michael works on the QNX kernel and has in-depth knowledge and experience with embedded systems and system architecture.

Previously On...

We started looking at the simplest use case for mmap(), i.e. "Give me some plain old RAM", and used it to implement a very simple version of malloc(). In looking at the pointers we were given by mmap(), we discovered ASLR at work, but adjusted our expectations about how random "random" is:

- Run the same program twice? Random.

- Call mmap() twice for plain old RAM within the same program? Not random.

And, we saw that mmap() returns page-aligned addresses in the virtual address space: the bottom 12 bits were always 0.

I want to look at this a little more and make sure we all remember that day in our OS course that talked about Memory Management Units (MMUs), because the MMU is The Thing we're dealing with when we talk about mmap(); it maps memory accesses from one address to another.

MMU

First of all, let's remember that MMUs are optional. The CPU does not need an MMU to do what it needs to do. They literally used to be sold separately:

If you have the need, PCB real estate, and room to cut into ROI due to a higher BOM cost, sure, go ahead, add an MMU.

And even today, when the "integrated" in "integrated circuit" means that most CPUs have an MMU built in, turning the MMU on is still optional.

Which brings us back to "the need" for an MMU.

Why does someone "need" a device that slows down every single access the CPU makes, in a world where "That's nice. But, can't it go faster?" is de rigueur?

Two reasons:

- Nobody needs a seatbelt until they need a seatbelt.

- "can" vs. "may".

I claim that both of these are really different views of the "state explosion problem".

So Much Room For Mistakes!

I'll put it in simple and drastic terms: Computers are state machines, and we have to consider:

- the number of possible states, and

- the number of possible state sequences (aka behaviours).

If we have a state machine that can be represented by 2 bits, there are 4 possible states, 0b00, 0b01, 0b10, 0b11. Let's call them A, B, C, and D.

The dimension of time is fundamental too, so there is a large number of possible state sequences:

- A

- A, B, A, B

- A, B, C, D

- B, C, A, D

- C, C, D, B, A

- A, B, B, A, A, B, B, A, C, A, D, A, B, A, repeat

- ...

Even with just four possible states, the number of possible sequences is effectively infinite, bounded only by the CPU's capability to modify state.

Now say we have a computer with 16 GB of memory, i.e. 137,438,953,472 bits. That's a lot of states. A lot of behaviours.

At the beginning of this series, we said the only behaviour acceptable is the one that causes the system to display "Hello, world!" to a UART. i.e. In the HUGE set of possible states and possible state sequences, only a few actually cause the desired outcome to happen.

So, in a world where a computer can do an essentially infinite number of things, what are the chances that your program does exactly the right thing, always? What are the chances that all the programs do exactly the right thing, always?

If you can guarantee that nothing ever goes wrong, or accept the risk and consequences of something going wrong, then, no, an MMU is not required. But otherwise...

MMU - The 25¢ Tour

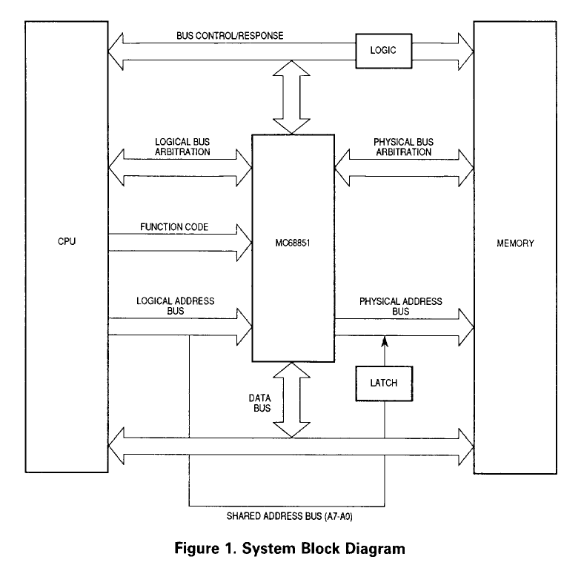

This image is taken from the MC68851 MMU's user manual and, IMHO, explains an MMU quite nicely.

Part 1 - May I?

The MMU sits between the CPU and (almost) everything else and says, for each access (read, write, fetch) that the CPU makes, "Hold up. Should you be doing that?"

If the answer is "Yes", the MMU passes the access request out to the system, and returns the result.

If the answer is "No", the MMU does not pass the access request out to the system, and then tells the CPU, "FYI."

The MMU's configuration has all the "Yes" answers, because, by default, the answer is "No".

(Aside: Well, that's not 100% true. The MMU's configuration has all the answers. If there's no answer in the configuration, the answer is "No". But there can still be an answer in the configuration that is "No"; it doesn't have to be "Yes".)

The MMU can also be configured to be specific about what you may and may not do. For example, it can be told to allow a read to a specific part of the virtual address space, but forbid any writes. Similarly, you can tell the MMU how to respond if the CPU tries to fetch some code to execute. "This is just data, pal. No executing this."

Who decides "Should you be doing that?", i.e. who decides who can configure the MMU? That's a little bit OS, a little bit system configuration person, and a little bit software-writing person.

I mean, in our simple program we called mmap() to say "Let me access some plain old RAM!", i.e. "Dear QNX Kernel, would you please configure the MMU so that it does not stop me when I try to access some memory?"

Our program initiated the request to configure the MMU, and then QNX configured the MMU such that when our program accessed that memory, the MMU said, "I'll allow it."

The system configurator also plays a role in terms of saying how much memory there is in the system, how much of it can be used to fulfill the answer to "May I please have some plain old RAM?" (System RAM), and (larger topic) configured resource constraints (e.g. RLIMIT_AS) and abilities.

Part 2 - Translation

The MMU also does a "mapping" between the addresses being used by the CPU, and those that the MMU uses when it talks to the rest of the system. This is also known as "address remapping".

e.g. For a simple configuration where the mapping is 1-to-1:

CPU: Please read 1 byte from address 0x1000

MMU: My configuration says you are allowed to perform reads from address 0x1000. I'll allow it.

MMU: I see there is a 1-to-1 mapping. I will therefore use the address 0x1000 for the access.

MMU: Hey, system, please read 1 byte from address 0x1000.

System: Here you go: 42.

MMU: Hey, CPU, a read of 1 byte from address 0x1000 returned 42.

CPU: Thank you. Now please ...

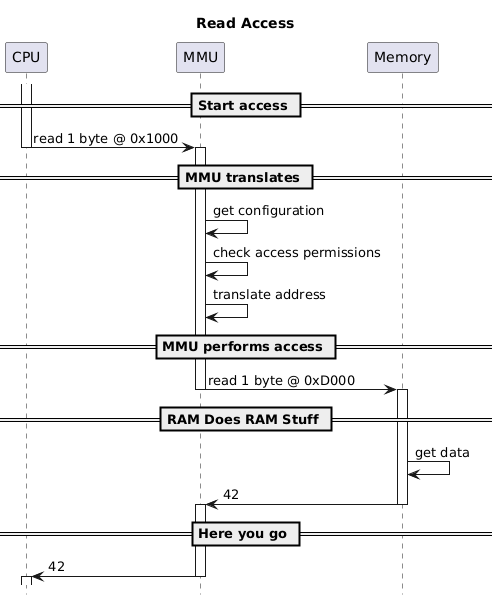

But, you could also configure the MMU to say "If someone performs an access to address 0x1000, turn that into an access to 0xD000."

CPU: Please read 1 byte from address 0x1000

MMU: My configuration says you are allowed to perform reads from address 0x1000. I'll allow it.

MMU: I see there is a mapping for address 0x1000 to address 0xD000. I will therefore use the address 0xD000 for the access.

MMU: Hey, system, please read 1 byte from address 0xD000.

System: Here you go: 42.

MMU: Hey, CPU, a read of 1 byte from address 0x1000 returned 42.

CPU: Thank you. Now please ...

Or, in picture form:

Terminology

Address 0x1000, and address 0xD000. With the above context, we know that the former is used by the CPU and the latter is used on the physical system by the MMU.

But, what if I say without more context "address 0xFFD2". Was it used by the CPU, or the MMU? It's ambiguous.

The address used by the actual physical system, we'll call that a "physical address". The MMU therefore issued a read to physical address 0xD000.

As for the address 0x1000, you can see from the top diagram above that the 68851 calls it a "logical" address. I am going to call it instead a "virtual address". Who is right? I will cite the case of H. Dumpty v. Alice (1871), 4 CLD (Wonderland) and say that the word "means just what I choose it to mean—neither more nor less."

Therefore, for our discussion:

- 0x1000 is a virtual address

- 0xD000 is a physical address

But, each side of the MMU can obviously use a whole range of address values. This means we have:

- the physical address space (PAS), i.e. the set of all possible physical addresses.

- the virtual address space (VAS), i.e. the set of all possible virtual addresses.

For example, with QNX 8, we use a 64-bit virtual address space. A virtual address can be anywhere from 0x0000'0000'0000'0000 to 0xFFFF'FFFF'FFFF'FFFF.

What happens when you access physical address P? It depends on the system. Could be RAM. Could be a UART register. Could be GPU. It depends.

What happens when you access virtual address V? It depends on:

- whether there's a mapping at that virtual address at all;

- if there is a mapping, which physical address it is mapped to;

- and then we're back to: it depends on the system. RAM? UART? GPU?

I'll come back to this again in a later post.

MMU As Seatbelt

Nobody needs an MMU until someone accidentally tries to touch memory they shouldn't be touching.

If you tell the MMU what someone is allowed to access, and how they're allowed to access it, then the MMU can enforce that (within limits).

This is the MMU helping you protect against unintended errors.

MMU As Lock

A malicious actor doesn't care what your code is supposed to do. They recognize that they can exploit the state explosion problem to find a behaviour or set of behaviours that produce what they want to happen.

To defend against malicious actors, the MMU also acts to isolate the physical address space.

You can't corrupt data the MMU won't let you write to.

You can't read data the MMU won't let you read from.

(Aside: Yes, rowhammer is an exception to that. And I suppose if you could induce SEUs via exploits, that too.)

(Aside: Yes, Meltdown, Spectre, and their ilk are exceptions.)

More specifically, a well-configured MMU acts to isolate accesses to the physical address space. A perfectly valid MMU configuration is "Do whatever you want. I'll allow it. 1-to-1 mapping vaddr to paddr. Give'r!" but that MMU configuration would be (almost) exactly the same as having no MMU.

Spatial Isolation

No matter the intent – accidental or malicious – behind an access that should not happen, the MMU provides "spatial isolation": isolation of accesses to the physical address space.

Each process has its own virtual address space, i.e. its own MMU configuration, i.e. its own view of the physical address space.

Using virtual address spaces, you can provide spatial isolation by ensuring there is:

- no sharing of physical address ranges, or

- sharing only when 2 or more processes agree to the terms of sharing.

When we wrote our cheap-n-dirty version of malloc(), we did this:

```c
#include <stddef.h>
#include <sys/mman.h>   /* mmap(), PROT_*, MAP_*; NOFD on QNX */

void*
my_malloc(const size_t size)
{
    const int prot = PROT_READ | PROT_WRITE;    /* plain old RAM: read/write, no exec */
    const int flags = MAP_PRIVATE | MAP_ANON;   /* not shared, not backed by a file */

    void * const p = mmap(NULL, size, prot, flags, NOFD, 0);
    if (MAP_FAILED == p) {
        return NULL;    /* malloc() reports failure with NULL, not MAP_FAILED */
    }
    return p;
}
```

In the context of spatial isolation, MAP_PRIVATE is the key part here. It means that the range of physical addresses that will be used as a result of the mapping (i.e. the RAM you're being given access to) is currently not given to any process, and will not be given to any other process until you're done with the memory.

Peeking At Physical Addresses

This is an aside, but when you're working on your software, you might care about the value returned by malloc() (or, more likely, mmap()), especially when debugging. Normally, though, nobody cares much about the physical address corresponding to a virtual address.

However, there are cases where this can be useful, so let's take a quick peek at it and see if it jibes with some other things we talked about in earlier posts.

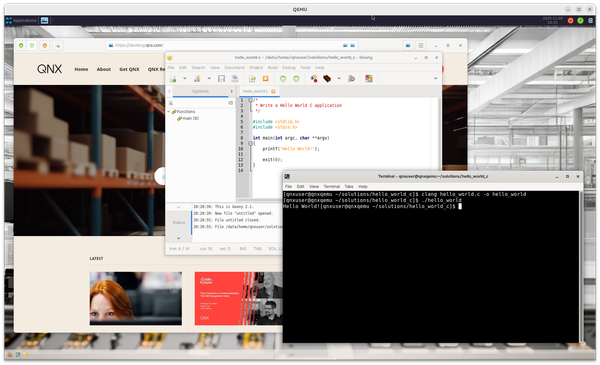

Let's extract the meat and potatoes from our malloc() for a second, and focus on mmap() proper, but with the same use case ("Gimme some RAM"):

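```c
#include <assert.h>
#include <inttypes.h>   /* PRIX64, uint64_t, uintptr_t */
#include <stdio.h>
#include <sys/mman.h>

const size_t size = 42;
const int prot = PROT_READ | PROT_WRITE;
const int flags = MAP_PRIVATE | MAP_ANON;

void * const vaddr = mmap(NULL, size, prot, flags, NOFD, 0);
assert(MAP_FAILED != vaddr);

/* PRIX64 expects a uint64_t, so convert the pointer via uintptr_t. */
printf("Virtual address: 0x%16.16" PRIX64 "\n", (uint64_t)(uintptr_t)vaddr);
```

(Note: I like seeing all the zeros in a value, so it's easier to distinguish 0x435345 from 0x43534, hence the "0x%16.16" PRIX64.)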

Now, let's ask QNX for the physical address that this virtual address gets mapped to, using the function mem_offset() :

off_t paddr; // physical address

int rv = mem_offset(vaddr, NOFD, size, &paddr, NULL);

assert(0 == rv);

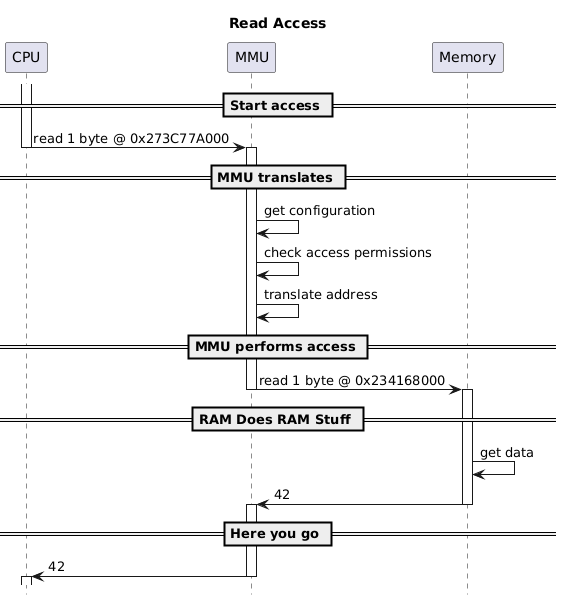

printf("Physical address: 0x%16.16" PRIX64 "\n", paddr);and run it to get

Virtual address: 0x000000273C77A000

Physical address: 0x0000000234168000Does this physical address, 0x0000000234168000, make sense?

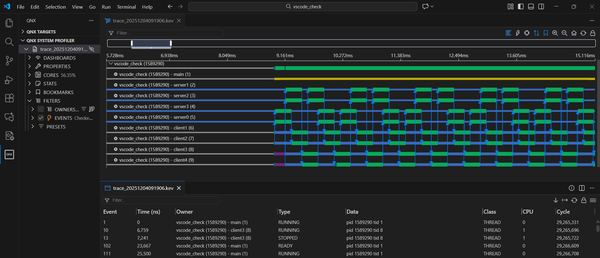

We know it should have been taken from System RAM, so, let's take a look at the System RAM on our QEMU system using QNX's pidin utility to examine the physical address space info (asinfo) portion of the system page:

```
/ # pidin syspage=asinfo
Header size=0x000000f0, Total Size=0x00000980, Version=2.0, #Cpu=2, Type=4352
Section:asinfo offset:0x000005c0 size:0x00000240 elsize:0x00000018
0000) 0000000000000000-000000000000ffff o:ffff /io
0018) 0000000000000000-000fffffffffffff o:ffff /memory
0030) 0000000000000000-00000000ffffffff o:0018 /memory/below4G
0048) 0000000000000000-0000000000ffffff o:0018 /memory/isa
0060) 0000000006000000-00000000ffefffff o:0018 /memory/device
0078) 00000000fff00000-00000000ffffffff o:0018 /memory/rom
0090) 0000000000000000-000000000009fbff o:0048 /memory/isa/ram
00a8) 0000000000100000-0000000000ffffff o:0048 /memory/isa/ram
00c0) 0000000001000000-0000000005ffffff o:0030 /memory/below4G/ram
00d8) 0000000006000000-00000000bffdffff o:0060 /memory/device/ram
00f0) 0000000100000000-000000023fffffff o:0018 /memory/ram
0108) 00000000000f5a60-00000000000f5a73 o:0018 /memory/acpi_rsdp
0120) 00000000fee00000-00000000fee003ef o:0018 /memory/lapic
0138) 0000000000835348-0000000000e2a043 o:0018 /memory/imagefs
0150) 0000000000800200-0000000000835347 o:0018 /memory/startup
0168) 0000000000835348-0000000000e2a043 o:0018 /memory/bootram
0180) 0000000000001000-000000000009efff o:0090 /memory/isa/ram/sysram
0198) 0000000000100000-000000000010ffff o:00a8 /memory/isa/ram/sysram
01b0) 0000000000117000-0000000000117fff o:00a8 /memory/isa/ram/sysram
01c8) 000000000011f000-0000000000834fff o:00a8 /memory/isa/ram/sysram
01e0) 0000000000e2b000-0000000000ffffff o:00a8 /memory/isa/ram/sysram
01f8) 0000000001000000-0000000005ffffff o:00c0 /memory/below4G/ram/sysram
0210) 0000000006000000-00000000bffdffff o:00d8 /memory/device/ram/sysram
0228) 0000000100000000-0000000239fc2fff o:00f0 /memory/ram/sysram
```

See that last entry there, at offset 0x0228, with the sysram range [0x0000000100000000, 0x0000000239fc2fff]? Looks like our paddr falls in that range:

```
End  : 0x0000000239fc2fff
paddr: 0x0000000234168000   // our paddr lies within this asinfo entry's limits
Start: 0x0000000100000000
```

Therefore, to bring it back to what we'd talked about earlier:

Recap

- In a computer that has no MMU, all addresses are "physical addresses".

- The set of possible physical addresses is the "physical address space"

- In a computer with an MMU:

- The CPU uses "virtual addresses", and

- The MMU translates virtual addresses to physical addresses

- The MMU has a configuration:

- Specifies mapping from virtual addresses to physical addresses

- Also specifies which access types are allowed:

- read data, write data, or fetch an instruction for execution

- The set of possible virtual addresses in an MMU configuration is the "virtual address space"

- Each process has its own virtual address space

- i.e. each process has its own MMU configuration

- Therefore all accesses performed by all threads within a process are subject to the process's MMU configuration.

Oh, in a multi-CPU system, each CPU has its own MMU. Bigger topic.

Coming Up...

Next we'll take a closer look at virtual addresses. Stay tuned!