QNX From The Board Up #9 - Kernel Initialization

Take a look at the relationship between the "Kernel" (aka "kernel code") and the "Kernel Process".

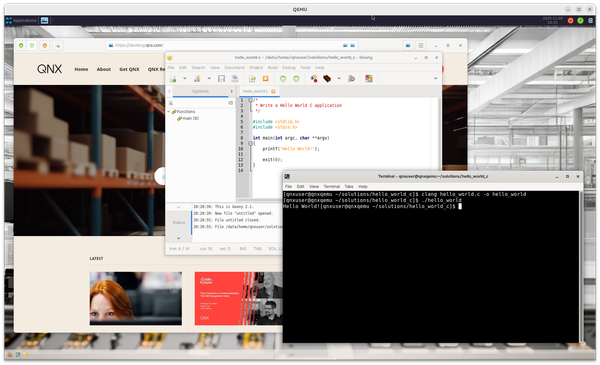

Welcome to the blog series "From The Board Up" by Michael Brown. In this series we create a QNX image from scratch and build upon it stepwise, all the while looking deep under the hood to understand what the system is doing at each step.

With QNX for a decade now, Michael works on the QNX kernel and has in-depth knowledge of, and experience with, embedded systems and system architecture.

Normally I like to explain things in a clean, straightforward narrative: If A, then B. If B, then C. If that makes sense, and A is obvious, then C must be true. But, that only works if you're familiar with all that and there's an obvious reason why B if A, etc. If I say "Calgary, Fort Macleod, Lethbridge", that makes sense to some people, and some will even say "Why not hang a left at Claresholm over to the 23?", but then I would have to point out that skipping Fort Macleod means you miss out on the Empress Theatre and the Fort Museum, and you do not want to do that. And besides, what's your rush? It's a beautiful part of the country. And, yes, alas, many will say "What's a Calgary?"

The point being, if the start point, end point, and requirements for all points in between don't make sense, then just stating the sequence is informative, but not educational.

So, I hope this doesn't get too Memento-esque, but, to explain kernel initialization, I want to start from a place of familiarity – i.e. the user process, like the ls or nkiss programs we wrote in an earlier posting – explore some of the services provided by the kernel to user code, then start working our way backwards from there. By seeing the places where "Z depends upon Y", and "Y depends upon X", etc., the kernel's initialization sequence becomes – I hope! – a little more sensical.

Kernel's Services Provided To User Processes

In previous posts, we talked about how printf() is just making use of the kernel's messaging functionality to send a message to a server. (More specifically, it uses MsgSendv() to send a QNX-specific message for POSIX file functionality, with the type _IO_WRITE.) Remember? Channels, connections, messages, and all that. And we talked about how this message-based functionality is exposed via kernel calls, which are based upon CPU-specific system calls.

Ok, but what else does user code need from the kernel?

Someone familiar with C might say "malloc()!", i.e. "Give me some memory!", but, that function is actually something implemented in the C library. It is (frequently) possible (and desirable!) to call malloc() and not make a kernel call.

However, if you call it enough times or under the right conditions, that malloc() code makes use of the function mmap(), which is the way (Well, one of the ways, but, primus inter pares) to get memory from the kernel. This function is fundamental, and well worth its own series of articles.
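To make the "not every malloc() is a kernel call" point concrete, here is a minimal sketch of a bump allocator layered on POSIX mmap() with anonymous mappings. It is not QNX's libc allocator (it never frees and can't handle large requests), and toy_malloc() and toy_mmap_calls() are hypothetical names. Most calls are served from a chunk already in hand; only when that chunk runs out does it ask the OS for more.

```c
#define _DEFAULT_SOURCE            /* glibc: expose MAP_ANONYMOUS; harmless elsewhere */
#include <assert.h>
#include <stddef.h>
#include <sys/mman.h>

#ifndef MAP_ANONYMOUS
#define MAP_ANONYMOUS MAP_ANON
#endif

#define CHUNK_SIZE (64u * 1024u)   /* assumption: ask the OS for 64 KiB at a time */

static unsigned char *chunk;       /* current chunk obtained from mmap() */
static size_t chunk_used;          /* bytes already handed out from it */
static unsigned mmap_calls;        /* how often we had to ask the OS */

/* Toy allocator: 16-byte granularity, no free(), requests up to CHUNK_SIZE. */
void *toy_malloc(size_t n)
{
    if (n == 0 || n > CHUNK_SIZE)
        return NULL;
    n = (n + 15u) & ~(size_t)15u;  /* round up to a multiple of 16 */

    if (chunk == NULL || chunk_used + n > CHUNK_SIZE) {
        /* Slow path: only here does the allocator go to the OS. */
        void *p = mmap(NULL, CHUNK_SIZE, PROT_READ | PROT_WRITE,
                       MAP_PRIVATE | MAP_ANONYMOUS, -1, 0);
        if (p == MAP_FAILED)
            return NULL;
        chunk = p;                 /* the rest of the old chunk is abandoned */
        chunk_used = 0;
        mmap_calls++;
    }

    /* Fast path: carve the next piece out of memory we already have. */
    void *result = chunk + chunk_used;
    chunk_used += n;
    assert(chunk_used <= CHUNK_SIZE);
    return result;
}

unsigned toy_mmap_calls(void) { return mmap_calls; }
```

Two 10-byte requests land 16 bytes apart in the same chunk and cost a single mmap(); it takes thousands of small allocations before a second one is needed.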

BUT, mmap() does not turn into a kernel call. Well, not directly. More precisely, there is no kernel call number that corresponds to mmap()'s functionality.

We described in a previous post how, in QNX, the system call with the number 11 is the kernel call for MsgSendv(). i.e. when a system call is made with the number 11 in a specific register, that's treated as a request for the kernel functionality described by MsgSendv() in the user docs. There is no such number for mmap().

Instead, mmap() sends a message to a server in the kernel's process, and that message has a specific type and subtype which identifies the message as requesting mmap().
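One common way for a kernel to turn that number into code is a table of handlers indexed by the call number. The sketch below models that idea in ordinary C; the table, kc_msg_sendv() and dispatch_kernel_call() are hypothetical and are not procnto's implementation. The only value taken from the text is 11 for MsgSendv(), and there is deliberately no slot for mmap(), which travels as a message instead.

```c
#include <assert.h>
#include <stddef.h>

typedef long (*kcall_handler)(long arg);

/* Hypothetical stand-in for the kernel's MsgSendv() handler. */
static long kc_msg_sendv(long arg) { return arg + 1; }

#define KCALL_MSG_SENDV 11   /* number given in the text for MsgSendv() */
#define KCALL_TABLE_LEN 64   /* hypothetical table size */

/* Index = kernel call number; empty slots are unsupported numbers. */
static const kcall_handler kcall_table[KCALL_TABLE_LEN] = {
    [KCALL_MSG_SENDV] = kc_msg_sendv,
};

/* What the system-call entry conceptually does with the number the user
   code placed in a register before trapping into the kernel. */
long dispatch_kernel_call(unsigned num, long arg)
{
    if (num >= KCALL_TABLE_LEN || kcall_table[num] == NULL)
        return -1;           /* hypothetical "no such kernel call" result */
    return kcall_table[num](arg);
}
```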

And that means we should step back a bit to review the architecture of the QNX kernel.

Aside: IIUC, on x86-64 Linux there is a direct system call for mmap(): number 9.

The QNX Microkernel

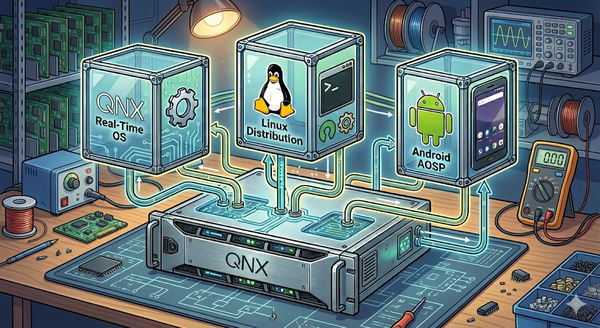

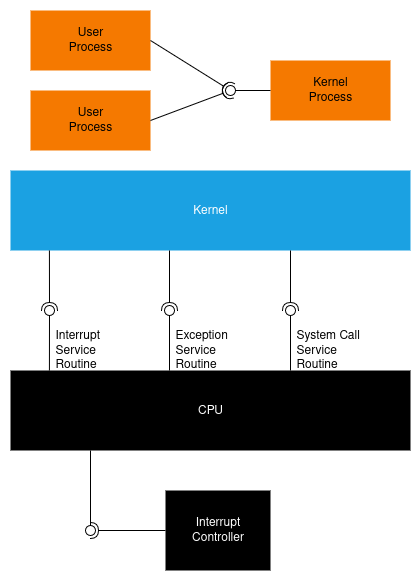

In this image, back in the first From The Board Up post, we showed, in very simple terms,

- hardware vs. software,

- user processes (orange) vs. the QNX kernel (uh, cerulean?).

Let's crack that cerulean box open a bit:

In way-too-simple terms, the cerulean box was split into:

- the cerulean box "Kernel", aka "kernel code", and

- the orange box, "Kernel Process".

Second part first, because the idea of a process should be familiar to most people. When you run a program, a process is created, and the code is executed by a thread (or threads) within that process. Easy peasy. And, the kernel kinda does that too when it creates the kernel process. Tons of asterisks here, but, it's a process, and the threads within can interact with the kernel code just like any other process. (Kinda mostly, but not exactly.)

Ok, now the first part, which is a little more difficult because there are a lot of details here, and people will helpfully say "Don't forget about X", "Don't forget about Y" and "But that's not entirely true, because Z." So, for the sake of pedagogy, I'm going to describe it in very simple terms. Therefore, please keep in mind that there are metric herds of asterisks floating around like box jellyfish.

To distinguish these two things well, let's step back and talk about something more fundamental: an execution environment.

Execution Environment

Q) When your code is running on a CPU:

- What help can it expect to be available?

- What happens when you call a function?

- What happens if you access a non-general register?

- What memory do you have access to?

- What happens when an interrupt request is delivered to the CPU?

- What happens when an exception (e.g. divide by zero) is generated?

A) It depends on the execution environment, which is the answer to all those questions (and more).

The C specification, aka ISO/IEC 9899, talks about two execution environments:

- freestanding, and

- hosted.

For the latter, we're typically talking about code running on (hosted by) an operating system. Lots of functionality available. If you can't do it in your code, you can always ask for help from the OS.

For freestanding, this is meant to capture the conditions of code running "bare metal". It's all about your C code, the compiler, the (static) linker, and the CPU(s). There is no OS to help you out. You are on your own, friendo.

And this view of the world makes sense, especially when you look at the world of 1972 when C was being created as a high level language to help make the UNIX operating system more portable. Until then, UNIX had been hand-rolled PDP-11 assembly. The C language was created to (re)write the UNIX kernel (freestanding) and the programs to run on UNIX (hosted).

Given this, we'll say that:

- "the kernel code" is running in a freestanding execution environment, and

- "the kernel process" is running in a hosted execution environment.

Aside: In most other kernels "the kernel process" doesn't exist. That's what makes QNX a microkernel.

And, in a nod to all those asterisks and their venom-containing tentacles floating around:

- "freestanding execution environment" does not mean that there are no services available, and

- the hosted execution environment created for the kernel isn't exactly the same as the hosted execution environment given to the other processes that run on QNX.
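On the C side, the freestanding/hosted split is something code can actually test: C99 and later compilers predefine __STDC_HOSTED__ as 1 for a hosted implementation and 0 for a freestanding one (GCC and Clang switch to the latter with -ffreestanding). A small sketch; is_hosted() and report_environment() are illustrative names, and nothing here claims how procnto itself is built.

```c
/* __STDC_HOSTED__ is predefined by C99 and later compilers:
   1 in a hosted implementation, 0 in a freestanding one. */
#if __STDC_HOSTED__
#include <assert.h>
#include <stdio.h>
#endif

int is_hosted(void)
{
#if __STDC_HOSTED__
    return 1;
#else
    return 0;
#endif
}

void report_environment(void)
{
#if __STDC_HOSTED__
    /* Hosted: the whole C library (printf, malloc, fopen, ...) is available. */
    printf("hosted: the OS and C library are here to help\n");
#else
    /* Freestanding: only a few headers such as <stddef.h> and <stdint.h> are
       guaranteed, so there is no printf() to call. You're on your own. */
#endif
}
```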

Kernel Services - The Kernel

The functionality available via kernel calls, and therefore provided by the "kernel code", consists of:

- message-based IPC
  - channels, connections, messages, pulses, sigevents
- signals
- threads
  - lifetime management
  - synchronization
    - mutexes, condition variables, semaphores, barriers
  - scheduling
- interrupts
- clocks
- software timers
- message queues
- instrumentation (tracing)

There's a lot there, but, only a few things have serious initialization work. We'll come back to this.

Kernel Services - The Kernel Process

We said in "From The Board Up #4" that:

> A microkernel architecture keeps user-provided device drivers outside the kernel. That means device drivers go inside processes, and that means a microkernel needs good inter-process communication (IPC). But, it doesn't mean there can't be a few QNX-provided (trusted) resource managers inside a process.

"a few QNX-provided (trusted) resource managers inside a process" is referring to the kernel process. This process goes by many names, but, for this series, I'll call it the kernel process, or the kernel's process.

We talked about how one resource manager is responsible for /dev/text, and that (in our QEMU configuration) it interacts with an 8250-compatible UART (via startup-provided callouts). This resource manager runs within the kernel process.

When we were playing with our minimal config and home-grown ls, we saw all the files under /dev and /proc, and the files in the image in /proc/boot (Image File System). All these resource managers are running in the kernel process.

So, part of the initialization of the kernel process means firing up these resource managers. But, these resource managers do things that assume kernel calls are working. In fact, who created the kernel process?

And that's why the kernel process creation and initialization happens after the kernel code initializes itself: the kernel process depends on kernel code being initialized.

The Kernel Process - The Other Servers

It's easy to talk about the kernel process's resource managers because we saw the files they exposed to the system when we ran our very simple ls from our very simple command interpreter process (shell), namely nkiss.

But, there are other servers in the kernel process too. And that brings us back to mmap() sending a message of a particular type and subtype to the kernel process.

There are a few of these message-based servers in the kernel process, of which the most noteworthy ones are:

- System Manager,

- Process Manager, and

- Memory Manager.

Can you figure out which one mmap() – the function which maps memory into a process – sends a message to?

Remember how our nkiss shell ran an executable by calling posix_spawnp() to create a new process? Guess which server in the kernel process is sent a message when you call posix_spawnp()?

Can you guess which server provides services that have system-wide impacts? e.g. rebooting the system using sysmgr_reboot().

Each server has its own message type and subtypes. I said earlier that there's "no direct kernel call for mmap()". To be precise, mmap() does make a kernel call, but it's MsgSend() (MsgSendnc_r(), to be specific), used to send a message to the Memory Manager server in the kernel's process.
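A minimal sketch of that client side, assuming a made-up message layout: a header carrying the type and subtype, followed by mmap()'s arguments, all handed to a single send call. The struct, the constants MEMMGR_TYPE and MEMMGR_MMAP, fake_msg_send() and toy_mmap() are hypothetical; QNX's real message definitions and MsgSendnc_r() are not reproduced here.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical type/subtype values, not QNX's real ones. */
#define MEMMGR_TYPE  0x0040u
#define MEMMGR_MMAP  0x0001u

struct msg_hdr {
    uint16_t type;      /* which server in the kernel process */
    uint16_t subtype;   /* which operation that server should perform */
};

struct memmgr_mmap_msg {
    struct msg_hdr hdr;
    uint64_t addr, len;
    uint32_t prot, flags;
};

/* Stand-in for the kernel call: here it only records the last message. */
struct memmgr_mmap_msg last_sent;
static int fake_msg_send(const void *msg, size_t len)
{
    assert(len == sizeof last_sent);
    memcpy(&last_sent, msg, len);
    return 0;
}

/* Hypothetical library wrapper: the "mmap()" a user calls builds a message
   and makes one kernel call to send it. Returns the send status only. */
int toy_mmap(uint64_t addr, uint64_t len, uint32_t prot, uint32_t flags)
{
    struct memmgr_mmap_msg m;
    memset(&m, 0, sizeof m);
    m.hdr.type = MEMMGR_TYPE;
    m.hdr.subtype = MEMMGR_MMAP;
    m.addr = addr;
    m.len = len;
    m.prot = prot;
    m.flags = flags;
    return fake_msg_send(&m, sizeof m);
}
```

A real mmap() would get the mapped address back in the server's reply; the sketch stops at the send.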

Sending Messages? How? And To Whom?

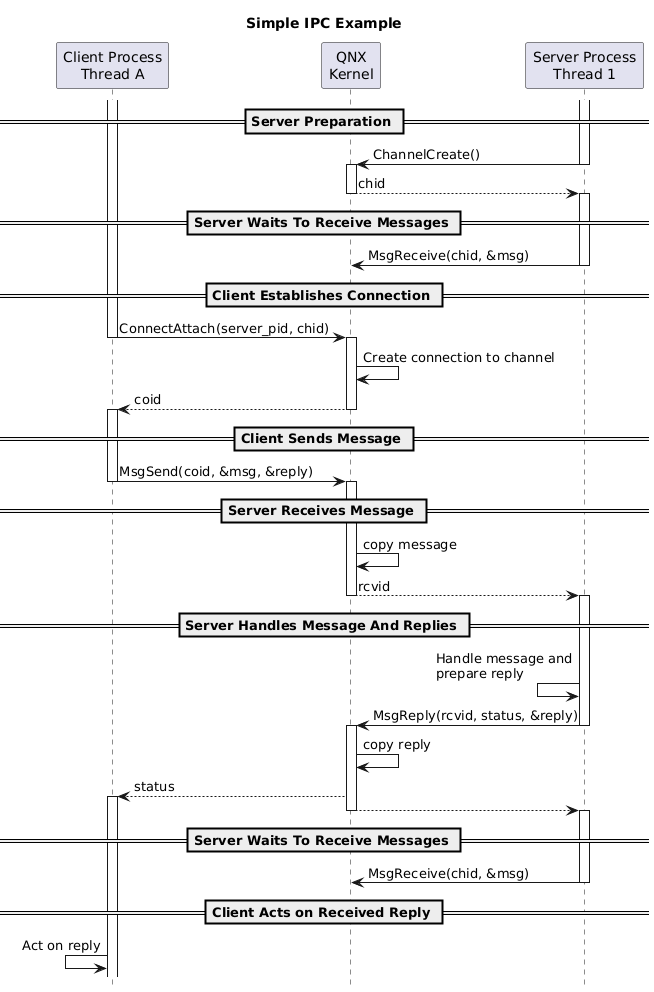

In From The Board Up - #7 Part 1, we spent a couple of postings talking about QNX's message-based IPC and how

- A server creates a channel, then
- one or more threads in the server block on the channel, waiting to receive messages.
- A client creates a connection to a channel,
- sends a message to the server on the connection,
- a server thread receives the message on the channel,
- responds to the client, then
- goes back to the channel to MsgReceive() more messages (or pulses).

But we haven't said exactly how the first part – setting up channels and connections – happens for the user processes and the kernel process.

Long story short:

- The kernel process creates a single public channel as part of its initialization,

- All processes are auto-magically given a connection to that channel by the kernel when a process is created, and

- All the kernel process's message-based servers receive messages on that channel.

And, all the kernel process's resource managers (which are also technically message-based servers, except the messages are the QNX-specific POSIX-file-related messages) receive messages (and pulses) on that channel too. More specifically, the kernel process has a pool of threads that are dedicated to receiving and processing messages to this channel. Based on the message received, a thread will do the work appropriate for that message.

For example, a thread in the kernel process might receive a message to do a mmap(), and then execute Memory Manager code, then, after finishing that and returning to receive messages on the channel, receive a message to do the work to read some bytes from /proc/config, and then execute the resource manager code for that file.
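That "based on the message received" step might look like the sketch below, assuming every message starts with a type field that selects which server's code to run. The type values and handler functions are hypothetical; the point is only that one pool thread, reading one channel, can run Memory Manager code for one message and resource manager code for the next.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical message types. */
enum msg_type { MT_MEMMGR = 1, MT_PROCMGR = 2, MT_SYSMGR = 3, MT_IO_READ = 100 };

struct msg { uint16_t type; uint16_t subtype; };

/* Hypothetical server entry points; each returns a reply status. */
static int memmgr_handle(const struct msg *m)  { (void)m; return 10; }
static int procmgr_handle(const struct msg *m) { (void)m; return 20; }
static int sysmgr_handle(const struct msg *m)  { (void)m; return 30; }
static int resmgr_read(const struct msg *m)    { (void)m; return 40; }

/* Called by a pool thread after it receives a message on the channel. */
int kernel_process_dispatch(const struct msg *m)
{
    switch (m->type) {
    case MT_MEMMGR:  return memmgr_handle(m);
    case MT_PROCMGR: return procmgr_handle(m);
    case MT_SYSMGR:  return sysmgr_handle(m);
    case MT_IO_READ: return resmgr_read(m);   /* e.g. a read of /proc/config */
    default:         return -1;               /* unknown message: error reply */
    }
}
```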

Just to recap: the initialization of the kernel process has to:

- create a public channel (ChannelCreate()),
  - NOTE: It also creates a side-channel connection to this channel for itself
- fire up the resource managers (/dev/text, IFS, etc.),
- fire up the message-based servers (Memory Manager, etc.),
- create a pool of threads that block until they receive messages or pulses on the channel.
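To picture "a pool of threads blocked on one channel", here is a user-space model built on POSIX threads: a queue guarded by a mutex and two condition variables stands in for the channel, and each pool thread loops on a blocking receive. toy_channel, chan_send(), chan_receive() and run_pool_demo() are hypothetical; this mimics the shape of ChannelCreate() and MsgReceive(), not their implementation, and has no replies, pulses or priorities.

```c
#include <assert.h>
#include <pthread.h>

#define QLEN      16
#define POOL_SIZE 4

/* A toy "channel": a bounded FIFO of integer messages. */
struct toy_channel {
    pthread_mutex_t lock;
    pthread_cond_t  nonempty, nonfull;
    int msgs[QLEN];
    int head, count;
};

static struct toy_channel chan = {
    .lock = PTHREAD_MUTEX_INITIALIZER,
    .nonempty = PTHREAD_COND_INITIALIZER,
    .nonfull = PTHREAD_COND_INITIALIZER,
};
static int handled_total;   /* work done so far; protected by chan.lock */

static void chan_send(struct toy_channel *c, int msg)
{
    pthread_mutex_lock(&c->lock);
    while (c->count == QLEN)
        pthread_cond_wait(&c->nonfull, &c->lock);
    c->msgs[(c->head + c->count) % QLEN] = msg;
    c->count++;
    pthread_cond_signal(&c->nonempty);
    pthread_mutex_unlock(&c->lock);
}

/* Blocks until a message arrives, like a pool thread sitting in MsgReceive(). */
static int chan_receive(struct toy_channel *c)
{
    pthread_mutex_lock(&c->lock);
    while (c->count == 0)
        pthread_cond_wait(&c->nonempty, &c->lock);
    int msg = c->msgs[c->head];
    c->head = (c->head + 1) % QLEN;
    c->count--;
    pthread_cond_signal(&c->nonfull);
    pthread_mutex_unlock(&c->lock);
    return msg;
}

static void *pool_thread(void *arg)
{
    (void)arg;
    for (;;) {
        int msg = chan_receive(&chan);
        if (msg < 0)
            return NULL;                 /* toy "shut down" message */
        pthread_mutex_lock(&chan.lock);
        handled_total += msg;            /* stand-in for the real work */
        pthread_mutex_unlock(&chan.lock);
    }
}

/* Start the pool, send messages 1..n, stop the pool, return the work done. */
int run_pool_demo(int n)
{
    pthread_t t[POOL_SIZE];
    handled_total = 0;
    for (int i = 0; i < POOL_SIZE; i++) {
        int rc = pthread_create(&t[i], NULL, pool_thread, NULL);
        assert(rc == 0);
        (void)rc;
    }
    for (int i = 1; i <= n; i++)
        chan_send(&chan, i);
    for (int i = 0; i < POOL_SIZE; i++)
        chan_send(&chan, -1);            /* one stop message per thread */
    for (int i = 0; i < POOL_SIZE; i++)
        pthread_join(t[i], NULL);
    return handled_total;
}
```

Whichever thread happens to be free picks up the next message; run_pool_demo(10) sums 1 through 10 across the pool, and a negative message is this sketch's way of telling a thread to stop.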

Back To The Kernel

We've glossed over the fact that the kernel process exists, without even getting into its genesis. There must be some moment of fiat lux process.

Above we said that the usual way to create a process, e.g. posix_spawn(), is to send a message to the Process Manager, which is a message-based server in the kernel process. But, we can't send a message to the Process Manager in the kernel process if the kernel process doesn't exist.

The only solution here is for the kernel to create the kernel process from scratch, using artisanal methods, with the freshest herbs and spices. Really, it just means:

- grabbing some RAM for a struct,
- populating that struct with all the right data, and
- then creating some threads to run in it.

That sounds simple enough, but, again, I glossed over a couple of things:

- "grabbing some RAM"
- "create a thread"

"Grabbing some RAM" sounds like mmap(), which is serviced by the Memory Manager in the kernel process, and "create a thread" is, as we pointed out above, done with a kernel call.

Creating a thread requires that:

- everything needed for a thread to run in a process is initialized, and

- kernel calls are all set up.

Let's talk about grabbing some RAM first.

System RAM

RAM, like oak, is nice. Programs love it. Computers have it, but, rarely ever enough.

One job of the operating system is to manage which programs have access to what memory regions, and, to make sure that no two programs have access to the same regions of RAM (unless they explicitly agree to it). (aka 'spatial isolation', i.e. restrict / isolate accesses to the physical address space)

The Virtual Memory Manager (VMM) has this role.

But, there's a small problem: To manage a resource requires memory. How can you get memory to manage a resource when the resource you're managing is memory?

Simple. Just grab a little bit for yourself at the start.

How does the QNX VMM know what memory on the computer needs to be managed? Since this is system-specific information, it asks the system page, specifically the "physical address space information" (asinfo) section of the system page. Like (almost) everything else in the system page, this is populated by the startup.

If we use the pidin utility on our QEMU-based system to query the asinfo section of the system page, we see something like this:

```
/proc/boot/pidin syspage=asinfo
Header size=0x000000f0, Total Size=0x00000910, Version=2.0, #Cpu=1, Type=4352
Section:asinfo offset:0x00000580 size:0x00000210 elsize:0x00000018
0000) 0000000000000000-000000000000ffff o:ffff /io
0018) 0000000000000000-000fffffffffffff o:ffff /memory
0030) 0000000000000000-00000000ffffffff o:0018 /memory/below4G
0048) 0000000000000000-0000000000ffffff o:0018 /memory/isa
0060) 0000000006000000-00000000ffefffff o:0018 /memory/device
0078) 00000000fff00000-00000000ffffffff o:0018 /memory/rom
0090) 0000000000000000-000000000009fbff o:0048 /memory/isa/ram
00a8) 0000000000100000-0000000000ffffff o:0048 /memory/isa/ram
00c0) 0000000001000000-0000000005ffffff o:0030 /memory/below4G/ram
00d8) 0000000006000000-0000000007fdffff o:0060 /memory/device/ram
00f0) 00000000000f5ad0-00000000000f5ae3 o:0018 /memory/acpi_rsdp
0108) 00000000fee00000-00000000fee003ef o:0018 /memory/lapic
0120) 0000000000435348-0000000000644043 o:0018 /memory/imagefs
0138) 0000000000400200-0000000000435347 o:0018 /memory/startup
0150) 0000000000435348-0000000000644043 o:0018 /memory/bootram
0168) 0000000000001000-000000000009efff o:0090 /memory/isa/ram/sysram
0180) 0000000000100000-000000000010ffff o:00a8 /memory/isa/ram/sysram
0198) 0000000000117000-0000000000117fff o:00a8 /memory/isa/ram/sysram
01b0) 000000000011f000-0000000000434fff o:00a8 /memory/isa/ram/sysram
01c8) 0000000000645000-0000000000ffffff o:00a8 /memory/isa/ram/sysram
01e0) 0000000001000000-0000000005e78fff o:00c0 /memory/below4G/ram/sysram
01f8) 0000000006000000-0000000007fdffff o:00d8 /memory/device/ram/sysram
```

Anything described as "sysram" is (surprise!) used as System RAM, i.e. it's RAM for general use by the system.

The VMM, as part of its initialization, walks through the asinfo, takes note of it all, then sets aside some of that System RAM for itself to manage all this information.
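Here is a minimal sketch of that walk, using entries copied from the pidin output above as data. The struct layout, matching on a "/sysram" name suffix, and vmm_reserve() are assumptions for illustration; the real system page stores names and attributes differently, and the real VMM's bookkeeping is far more involved. It totals the System RAM, then takes a slice off the front of the first region that is big enough, which is the "grab a little bit for yourself at the start" trick.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Assumed, simplified view of an asinfo entry (not the real layout). */
struct as_entry {
    uint64_t start, end;   /* inclusive physical address range */
    const char *name;      /* full path, e.g. "/memory/isa/ram/sysram" */
};

/* A few entries copied from the pidin output above, sysram and otherwise. */
struct as_entry asinfo_demo[] = {
    { 0x0000000001000000, 0x0000000005ffffff, "/memory/below4G/ram" },
    { 0x0000000000435348, 0x0000000000644043, "/memory/imagefs" },
    { 0x0000000000400200, 0x0000000000435347, "/memory/startup" },
    { 0x0000000000001000, 0x000000000009efff, "/memory/isa/ram/sysram" },
    { 0x0000000000100000, 0x000000000010ffff, "/memory/isa/ram/sysram" },
    { 0x0000000000117000, 0x0000000000117fff, "/memory/isa/ram/sysram" },
    { 0x000000000011f000, 0x0000000000434fff, "/memory/isa/ram/sysram" },
    { 0x0000000000645000, 0x0000000000ffffff, "/memory/isa/ram/sysram" },
    { 0x0000000001000000, 0x0000000005e78fff, "/memory/below4G/ram/sysram" },
    { 0x0000000006000000, 0x0000000007fdffff, "/memory/device/ram/sysram" },
};

static int is_sysram(const char *name)
{
    static const char suffix[] = "/sysram";
    size_t n = strlen(name), s = sizeof suffix - 1;
    return n >= s && strcmp(name + n - s, suffix) == 0;
}

/* Walk the entries and add up everything marked as System RAM. */
uint64_t total_sysram(const struct as_entry *e, size_t n)
{
    uint64_t total = 0;
    for (size_t i = 0; i < n; i++)
        if (is_sysram(e[i].name))
            total += e[i].end - e[i].start + 1;
    return total;
}

/* Hypothetical bootstrap step: carve `bytes` off the front of the first
   sysram region big enough and keep it for the VMM's own bookkeeping.
   Returns the physical address, or UINT64_MAX if nothing fits. */
uint64_t vmm_reserve(struct as_entry *e, size_t n, uint64_t bytes)
{
    for (size_t i = 0; i < n; i++) {
        if (is_sysram(e[i].name) && e[i].end - e[i].start + 1 >= bytes) {
            uint64_t paddr = e[i].start;
            e[i].start += bytes;    /* that range is no longer up for grabs */
            return paddr;
        }
    }
    return UINT64_MAX;
}
```

With these entries, total_sysram() comes to 0x7bd9000 bytes (a little under 124 MiB). Reserving 0x10000 bytes returns 0x1000 and leaves that first region starting at 0x11000.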

Quick recap of that: there's a VMM, and it needs to be initialized before anything can grab any RAM.

Even the kernel itself needs RAM; therefore, initializing the VMM is the first thing that happens in kernel initialization.

Almost. There are some command-line parameters to procnto-smp-instr that affect the behaviour of the VMM (viz. -m), so all of those parameters have to be parsed (getopted) first.

Alright then, parse the command-line parameters, then initialize the VMM.
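In code, the ordering constraint is simply "getopt() loop first, VMM second". A sketch assuming POSIX getopt(); vmm_options, vmm_init(), kernel_early_init() and vmm_is_ready() are hypothetical, and the -m argument is kept as an opaque string because its syntax isn't covered here.

```c
#define _POSIX_C_SOURCE 200809L    /* for getopt() and optarg */
#include <assert.h>
#include <unistd.h>

/* Hypothetical holder for options that influence the VMM. */
struct vmm_options {
    const char *m_arg;       /* raw -m argument, kept as an opaque string */
};

static int vmm_ready;

/* Hypothetical stand-in for the real VMM initialization. */
static void vmm_init(const struct vmm_options *opts)
{
    (void)opts;              /* a real VMM would honour the options here */
    vmm_ready = 1;
}

/* Step 1: parse the options. Step 2: only then initialize the VMM. */
int kernel_early_init(int argc, char *argv[], struct vmm_options *opts)
{
    int c;
    opts->m_arg = NULL;
    while ((c = getopt(argc, argv, "m:")) != -1) {
        switch (c) {
        case 'm':
            opts->m_arg = optarg;
            break;
        default:             /* getopt() returns '?' for unknown options */
            return -1;
        }
    }
    assert(!vmm_ready);      /* the VMM must not be running before this point */
    vmm_init(opts);
    return 0;
}

int vmm_is_ready(void) { return vmm_ready; }
```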

Ready For Threads

Back to this now:

- everything in the kernel needed for a thread to run in a process is initialized, and

- kernel calls are all set up.

This can be better stated as: do the rest of the work necessary to support the very first thread, which can request anything of the kernel that the kernel supports.

Let's revisit the things you can do via kernel calls:

- message-based IPC
  - channels, connections, messages, pulses, sigevents
- signals
- threads
  - lifetime management
  - synchronization
    - mutexes, condition variables, semaphores, barriers
  - scheduling
- interrupts
- clocks
- software timers
- message queues
- custom kernel calls
- instrumentation (tracing)

Most of these can be hand-wavingly described / dismissed as "initialize some data structures", but, a few of them are very interesting and worth a deeper look in later articles. For now though, a quick description of a few things.

Interrupts

Huge, huge, HUGE topic, but, long story short: when a chunk of hardware (aka an interrupt source) delivers an interrupt request (IRQ) to a CPU, basically saying "Hey! Something happened and I need software to take care of this!", (I'm gonna plug my nose and say "Hardware-based push notification") the CPU will interrupt the currently executing code by putting it aside, and jumping to code -- an interrupt service routine (ISR) -- ... somewhere.

The kernel must tell the CPU "If someone interrupts you, jump to my ISR here."

Aside: This is not the same as attaching to an interrupt with InterruptAttach(), although they are related. Again, huge topic.

Unfortunately, there are a couple of snags here:

- Each CPU family has its own way of identifying and dealing with interrupts.

- Each target has its own set of interrupt sources. Many may overlap, but, it depends.

For the former, the QNX kernel says "I got this." and does the configuration at the CPU. For the latter, the QNX kernel relies upon the interrupt info (intrinfo) in the system page.

But, take note that this approach of "Hey, CPU! When X happens, start executing here at address Y" is a common one. It's used with

- interrupts,

- exceptions (Exception Service Routine, aka Exception handler),

- kernel calls (Kernel Call Service Routine, aka Kernel Call handler),

- and heaps of other things.
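All of these share one shape: a table, indexed by "what happened", of addresses to jump to, which the kernel fills in during initialization. Here is a portable C model of that idea with the "CPU" played by an ordinary function. The event numbers, handler names and cpu_deliver() are hypothetical; real vector tables are CPU-specific (for example the IDT on x86-64, or the table pointed to by VBAR_EL1 on AArch64) and are installed with privileged instructions that plain C can't show.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical "what happened" numbers. */
enum event { EV_DIVIDE_BY_ZERO, EV_BAD_INSTRUCTION, EV_IRQ_TIMER,
             EV_KERNEL_CALL, EV_COUNT };

/* Which handler ran, so callers can see the effect. */
enum handler_id { H_NONE, H_EXCEPTION, H_ISR, H_KERNEL_CALL };

typedef enum handler_id (*handler_fn)(void);

static enum handler_id exception_handler(void)   { return H_EXCEPTION; }
static enum handler_id timer_isr(void)           { return H_ISR; }
static enum handler_id kernel_call_handler(void) { return H_KERNEL_CALL; }

/* The table the "CPU" consults: when X happens, start executing at Y. */
static handler_fn vectors[EV_COUNT];

/* Done once during kernel initialization. */
void kernel_install_vectors(void)
{
    vectors[EV_DIVIDE_BY_ZERO]  = exception_handler;
    vectors[EV_BAD_INSTRUCTION] = exception_handler;
    vectors[EV_IRQ_TIMER]       = timer_isr;
    vectors[EV_KERNEL_CALL]     = kernel_call_handler;
}

/* What the simulated CPU does when an event occurs. */
enum handler_id cpu_deliver(enum event ev)
{
    assert((unsigned)ev < EV_COUNT);
    if (vectors[ev] == NULL)
        return H_NONE;       /* no handler installed yet: nobody home */
    return vectors[ev]();
}
```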

Kernel Calls

Remember how system calls are used for kernel calls? During initialization, the kernel tells the CPU where to jump when someone makes a system call.

Make No Mistakes

One thing that deserves attention is exceptions.

Did you divide a number by zero? Did you try to execute a CPU instruction that is not a valid instruction? If you did, you are exceptional, and the CPU will say "This code needs adult supervision!" and jump somewhere. During initialization, the kernel tells the CPU where to jump when an exception happens, saying "I'm an adult".

We should probably do that before we get into some serious kernel code, like the VMM.

Wait wait wait

I've glossed over a very important point: CPU, singular? What about CPUs, plural?

CPUs, Plural

Other than the Commodore 64 on display at the Museum of Science and Tech, single-CPU systems are fairly rare these days. Therefore, the product managers at QNX have insisted, and continue to insist, that we support systems with more than one CPU. As of QNX 8, we support systems with up to (and including) 64 CPUs.

Normally, when a system is powered on, one of the CPUs (aka the Boot Processor (BP)) is chosen to be the one to start executing code, and it is responsible for doing whatever that system deems necessary to cause the other CPUs (aka the Application Processors (APs)) to start executing code.

How to do that is very CPU-specific. Instead of the kernel doing that, the kernel says "Hey, startup, I don't want to deal with these system-specific details. You do that."

There's a QNX-specific protocol defined by the kernel which says how startup must prepare the APs so that the kernel can cause them to start running kernel code (when the kernel code is ready to make that happen).

Therefore, at the point that startup hands control over to the kernel code, all the CPUs to be given to QNX must be powered, and alive, and:

- one CPU will be given by startup over to the QNX kernel code,

- while all the rest are waiting for commands from the kernel code.

And by "waiting", it's really just spinning on an address in memory waiting for a command code. The command code will either be:

- "jump to this address", or

- "Please be quiet and stay out of the way."

The latter is only for debugging and profiling. See the startup -P command-line option.
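A user-space model of that handshake, with POSIX threads standing in for APs and a C11 atomic int for the memory location they spin on. The mailbox layout, the command codes and the function names are hypothetical; the real startup/kernel protocol is QNX- and CPU-specific and isn't shown. The detail worth noticing is the ordering: the BP writes the entry address first and only then publishes the command with a release store, so an AP that sees the command with an acquire load also sees the address.

```c
#include <assert.h>
#include <pthread.h>
#include <stdatomic.h>

/* Hypothetical command codes written into each AP's mailbox. */
enum { AP_CMD_WAIT = 0, AP_CMD_JUMP = 1, AP_CMD_PARK = 2 };

struct ap_mailbox {
    atomic_int cmd;            /* polled by the AP, written by the BP */
    void (*entry)(int cpu);    /* "jump to this address" */
    int cpu;
};

static atomic_int aps_in_kernel;

/* Hypothetical kernel entry point for an AP. */
static void kernel_ap_entry(int cpu)
{
    (void)cpu;
    atomic_fetch_add(&aps_in_kernel, 1);
}

/* An AP after startup has prepared it: spin until told what to do. */
static void *ap_spin(void *arg)
{
    struct ap_mailbox *mb = arg;
    int cmd;
    while ((cmd = atomic_load_explicit(&mb->cmd, memory_order_acquire)) == AP_CMD_WAIT)
        ;                      /* busy-wait on the memory location */
    if (cmd == AP_CMD_JUMP)
        mb->entry(mb->cpu);
    /* AP_CMD_PARK: stay out of the way (this simulation just returns). */
    return NULL;
}

/* BP side: write the address first, then publish the command. */
static void bp_command(struct ap_mailbox *mb, int cmd, void (*entry)(int))
{
    mb->entry = entry;
    atomic_store_explicit(&mb->cmd, cmd, memory_order_release);
}

/* Start n_aps simulated APs, park the last n_parked of them, jump the rest
   into the kernel, and report how many reached the kernel entry point. */
int simulate_ap_bringup(int n_aps, int n_parked)
{
    pthread_t t[8];
    struct ap_mailbox mb[8];
    if (n_aps < 1 || n_aps > 8 || n_parked < 0 || n_parked > n_aps)
        return -1;
    atomic_store(&aps_in_kernel, 0);
    for (int i = 0; i < n_aps; i++) {
        atomic_init(&mb[i].cmd, AP_CMD_WAIT);
        mb[i].entry = NULL;
        mb[i].cpu = i + 1;     /* CPU 0 is the BP */
        int rc = pthread_create(&t[i], NULL, ap_spin, &mb[i]);
        assert(rc == 0);
        (void)rc;
    }
    for (int i = 0; i < n_aps; i++) {
        int cmd = (i >= n_aps - n_parked) ? AP_CMD_PARK : AP_CMD_JUMP;
        bp_command(&mb[i], cmd, kernel_ap_entry);
    }
    for (int i = 0; i < n_aps; i++)
        pthread_join(t[i], NULL);
    return atomic_load(&aps_in_kernel);
}
```

simulate_ap_bringup(3, 1) jumps two APs into the kernel and parks the third, so it returns 2.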

The Kernel Process

Once the kernel code is ready to handle exceptions, and receive kernel calls, we can start creating the kernel process, and then the first threads to run in it: the idle threads.

Each CPU gets an "idle thread", which is the thread that runs when there's nothing else to do. The scheduler must always have an answer to the question: "Which thread do I put on the CPU now?"

Each idle thread is locked to a specific CPU, and has priority 0, i.e. the lowest priority. This is also a reserved priority: user threads cannot have priority 0.
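Here's why an always-ready, priority-0 idle thread guarantees the scheduler an answer, as a sketch: start from the idle thread and switch only to a ready thread of higher priority. The structures and pick_next() are hypothetical, and a real scheduler doesn't linearly scan like this; the only property taken from the text is that idle sits at priority 0, below every other thread.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

struct thread {
    const char *name;
    int prio;              /* 0 is reserved for the idle thread */
    bool ready;
};

struct cpu_runq {
    struct thread *idle;   /* this CPU's idle thread: always ready */
    struct thread *others[16];
    size_t count;
};

/* "Which thread do I put on the CPU now?" Start from idle, then prefer any
   ready thread with a higher priority. Never returns NULL. */
struct thread *pick_next(const struct cpu_runq *rq)
{
    assert(rq->idle != NULL && rq->idle->ready && rq->idle->prio == 0);
    struct thread *best = rq->idle;
    for (size_t i = 0; i < rq->count; i++) {
        struct thread *t = rq->others[i];
        if (t->ready && t->prio > best->prio)
            best = t;
    }
    return best;
}
```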

As each CPU is commanded to start running code, it initializes that CPU, then finishes by asking the scheduler "Please run a thread now", and the idle thread for the current CPU is the only thread that's ready to run.

Normally, in a fully running system the idle thread's job is to say "Shhhhhh" to the CPU, but, at this point in its life, the idle thread has a very important job: commanding the other CPUs to start running their idle threads, until all the CPUs are running their idle threads, at which point the last idle thread running sees that the gang's all here, so the kernel can move on to the next phase of the initialization of the kernel process: getting the servers up and running!

To do this, the last idle thread running creates a thread in the kernel process that is responsible for running the kernel process's main() function.
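One simple way to implement "the last idle thread sees that the gang's all here" is an atomic counter: fetch-and-add hands each caller the number that arrived before it, so exactly one caller knows it was last. A sketch with hypothetical names, not the kernel's code; start_kernel_process_main() only records that it ran.

```c
#include <assert.h>
#include <stdatomic.h>

static atomic_uint idle_threads_running;
static atomic_uint kernel_main_starts;

/* Hypothetical stand-in for creating the thread that runs the kernel
   process's main(); here it only counts how often it was called. */
static void start_kernel_process_main(void)
{
    atomic_fetch_add(&kernel_main_starts, 1);
}

/* Called once by each CPU's idle thread when it first gets to run. */
void idle_thread_first_run(unsigned num_cpus)
{
    /* fetch_add returns the previous value, so exactly one caller, the
       last to arrive, sees num_cpus - 1. */
    unsigned before = atomic_fetch_add(&idle_threads_running, 1);
    assert(before < num_cpus);
    if (before + 1 == num_cpus)
        start_kernel_process_main();
}

unsigned kernel_main_start_count(void) { return atomic_load(&kernel_main_starts); }
```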

And now we're back to the point we were at before: bringing up the servers and resource managers in the kernel process.

From The Beginning

Now that we understand where we need to be at the end, and what has to happen before each step, let's back up to the beginning and put this all in sequence. We said in From The Board Up - #1 that the sequence after turning on the computer (and some computer-specific stuff) is:

- run the startup, then

- kernel initialization, then

- execute user code

Let's expand that. startup hands control of the system on one CPU to the kernel code, and then we:

- parse the procnto command-line options
- initialize the CPU (Boot Processor)
  - kernel calls, and exceptions
- initialize the VMM
- initialize the data structures for functionality for kernel calls
  - thread scheduler, etc.
- initialize interrupts
- create the kernel process struct from scratch
- create the per-CPU threads and add them to the scheduler:
  - idle, ...
- start scheduling threads:
  - idle thread on the BP is placed on the CPU, and brings up all the other CPUs (APs) in the system.
    - i.e. bring up the idle thread on each of the other CPUs
  - once all the idle threads are running, create a thread in the kernel process to run the kernel process's main()
- in the kernel process's main():
  - create a public channel, and a connection to it
  - initialize the message-based servers
  - initialize the resource managers
  - initialize the thread pool
  - one thread starts executing the commands in the IFS's initialization script
    - (We used /proc/boot/init in our example configurations)

At this point, we're at "execute user code", i.e. it's all about whatever you configured your system to do.
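The same sequence can be written as a table in which each step names the step it must follow, plus a check that nothing runs before its prerequisite. The step names paraphrase the list above and the dependency chain simply follows that list; boot_order and check_init_order() are a hypothetical sketch, not procnto's code.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

enum step { PARSE_OPTS, CPU_INIT, VMM_INIT, KCALL_DATA_INIT, INTR_INIT,
            KPROC_CREATE, IDLE_THREADS, SCHED_START, KPROC_MAIN, NUM_STEPS };

struct init_step {
    enum step id;
    const char *what;
    int needs;   /* step that must already have run, or -1 for none */
};

const struct init_step boot_order[] = {
    { PARSE_OPTS,      "parse procnto command-line options",         -1 },
    { CPU_INIT,        "BP: kernel-call and exception entry points", PARSE_OPTS },
    { VMM_INIT,        "initialize the VMM",                         CPU_INIT },
    { KCALL_DATA_INIT, "kernel-call data structures, scheduler",     VMM_INIT },
    { INTR_INIT,       "initialize interrupts",                      KCALL_DATA_INIT },
    { KPROC_CREATE,    "build the kernel process struct by hand",    INTR_INIT },
    { IDLE_THREADS,    "per-CPU idle threads",                       KPROC_CREATE },
    { SCHED_START,     "schedule; BP's idle thread brings up APs",   IDLE_THREADS },
    { KPROC_MAIN,      "kernel process main(): channel, servers",    SCHED_START },
};

/* Returns -1 if every step's prerequisite ran before it, otherwise the
   index of the first step that would run too early. */
int check_init_order(const struct init_step *steps, size_t n)
{
    bool done[NUM_STEPS] = { false };
    for (size_t i = 0; i < n; i++) {
        if (steps[i].needs >= 0 && !done[steps[i].needs])
            return (int)i;
        done[steps[i].id] = true;
    }
    return -1;
}
```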

That's It?

Stepping back, it can all be boiled down to:

- Initialize the kernel code to the point of having a kernel process ready for thread scheduling.
- Temporarily use the idle threads in the kernel process to bring up the other CPUs,
  - and then they return to being normal idle threads.
- Bring up the servers and resource managers in the kernel process.
- Start executing commands in the primary IFS's init script, which (except for our "Hello, Stranger!" config) involves creating processes.

Extra Clarification On Terminology

When referring to QNX's message-based IPC, we talked about

- A server that receives a message on a channel with MsgReceive().
- A client that sends a message over a connection to a channel and receives a reply back with MsgSend().

Server. Client. Gotcha.

What if the messages being sent and responded to are QNX's messages for POSIX-style file functionality? In this case, the server is called a resource manager, and the client is a client using a file descriptor to talk to a resource manager.

A file descriptor is a connection. But, not all connections are file descriptors.

Mr. Gibbs, a very long-time QNXer, recently and helpfully pointed out in an internal doc review that I myself was being very sloppy in my usage of this terminology. This exact context (kernel process) highlighted the need for specificity.

Coming Up...

We now have a framework upon which we can expand our understanding of

- the kernel code and kernel process in general

- clocks and software timers

- Inter-processor interrupts (IPIs)

- Interrupt service threads (ISTs).

- and more.

But, for the next article, I want to get into mmap() a little more, and show how to implement a simple malloc(). Sure, QNX provides one with the C library, libc.so, but, as Feynman (allegedly) said, "What I cannot create, I do not understand."

From there we can explore mmap(), shared memory, and typed memory.